By 1992, Duplan and Buckle weren’t just theorizing about AI and automation – they were implementing these concepts through the IT Environmental Database Management System (ITEMS). This in-house system, developed at International Technology Corp. (IT), was a pioneering attempt to bring order to the chaos of environmental datasets. While primitive by today’s standards, ITEMS encapsulated many features of an “expert system” for environmental management, blending database technology with automation and some rule-based intelligence. It effectively put into practice several of the ideas hinted at in the visionary predictions of 1989. Let’s break down what ITEMS did and why it was so ahead of its time:

- Central Relational Database (Oracle RDBMS): At the core of ITEMS was a robust relational database to store all data in structured tables. The system was built using the Oracle relational database management system and programmed in C – cutting-edge choices for the early 90s. This central database meant that soil data, water data, well information, chemical analyses, and other relevant data were all stored in one place, rather than being scattered across disparate files. Consistency and integrity of data were enforced, which is essential when dealing with tens of thousands of measurements.

- User-Friendly Data Entry and Retrieval: ITEMS provided a menu-driven interface with standard electronic forms for data entry and retrieval. Geologists could enter borehole log information into electronic forms that mirrored paper logs, and chemists could input lab results in a structured manner. These forms weren’t just dumb data-entry screens – they had validation rules and “triggers” (small pieces of code that run on specific actions) to catch errors or prompt for related information. This was an early form of automation designed to improve data quality; for example, if a user attempted to enter a contaminant name, the system could auto-fill the associated chemical code or prompt for the required units. For retrieval, the same forms could be used to query data – an intuitive approach that meant staff didn’t need to be database experts to ask complex questions.

- Automated Regulatory Setup: One innovative feature was a built-in library of regulatory standards and chemical information. ITEMS had a secondary database containing over 2,500 substances, including their names, CAS registry numbers, physical properties, and regulatory limits. Users could populate a project’s database by simply selecting chemicals of concern from prepared lists, such as all EPA priority pollutants or all constituents on the RCRA groundwater monitoring list. The system would then automatically load the details of those chemicals into the project. This saved enormous time and avoided transcription errors – there was no need to type out every pollutant name and standard manually. It also meant that when printing reports, the system could automatically flag which results exceeded regulatory limits by comparing them against its internal standards library. In short, ITEMS carried some built-in expertise in environmental regulations.

- Seamless Integration with Other Software (Automation of Analysis): Perhaps the most lauded capability of ITEMS was its ability to transfer data between the database and graphical software without requiring human intervention. At a time when most people would have to manually export a table of numbers and then import it into a graphing program, ITEMS automated this process. It interfaced directly with commercially available tools, such as AutoCAD, SURFER, and GRAPHER. For example, once all the well coordinates and contaminant concentrations were in the database, an engineer could request a contour map of groundwater pollution. ITEMS would launch SURFER or QuickSurf in the background, feed it the data, and automatically generate a two- or three-dimensional concentration contour plot. Similarly, well construction diagrams and boring logs could be drawn directly in AutoCAD from the stored data, ensuring that figures in reports matched the database values exactly. This level of automation eliminated countless hours of manual graphing and drafting. It also reduced the errors that creep in when data is transferred by hand. The integration even extended to Geographic Information Systems: ITEMS could work with a GIS (GEO/SQL-5) to visualize data spatially, again a forward-looking feature for the early 90s.

- Electronic Data Uploads and Validation: The system was designed to directly ingest laboratory results files, “downloading the data from the analytical laboratory directly into ITEMS,” which was described as a significant time-saver. This is a clear echo of the 1989 prediction about automating the process to speed up data availability. Instead of waiting for printed lab reports and then re-typing them, the lab could send a digital file (via modem or floppy disk in those days), which ITEMS would parse and load into the database. Along with this came automated quality checks – for instance, ITEMS would automatically generate backup quality assurance data for each chemical record and could enforce that required QA/QC fields were filled. By having the computer handle data transfer and some quality assurance, the turnaround time from sampling to analysis to actionable data was shortened, supporting more real-time decision-making.

- Advanced Data Analysis and Reporting: ITEMS greatly simplified the production of outputs needed for decision-makers and regulators. It could generate “tables for submission to the regulators” in any format and order required, pulling only the parameters of interest. Users could easily filter results (e.g., show only values above a cleanup level, or only the latest result from each well) by setting criteria in the reporting menus. In essence, it was an early forerunner of modern environmental data reporting tools, which allow dynamic slicing and dicing of data. Moreover, ITEMS included a sophisticated statistical analysis module. It implemented the EPA’s latest guidance on statistical analysis of groundwater monitoring data (from 1989) and offered tests to detect trends or differences between wells over time. For example, it could apply the Mann-Kendall test to determine if contamination levels in a well were trending upward or downward over time or generate control charts to monitor a well against its historical variability. These tasks, if performed manually, require considerable expertise and labor. By automating them, ITEMS helped environmental scientists focus on interpreting results rather than calculating them. In a way, this hints at the potential of AI by augmenting human decision-making: the system could crunch the numbers and flag statistically significant changes, effectively saying, “pay attention to these wells or these periods” – a rudimentary form of what we’d now call analytics.
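As an illustration of the kind of trend test mentioned in the last item, the following is a minimal Python sketch of the Mann-Kendall test. It is not the ITEMS implementation (which was written in C against Oracle); the function name `mann_kendall`, the 5% two-sided significance level, and the sample concentrations are assumptions made for this example, and the normal approximation used here is a simplification that is less reliable for very short series.

```python
import math
from collections import Counter
from itertools import combinations


def mann_kendall(values, z_critical=1.96):
    """Return (S, Z, trend) for a time-ordered series of measurements.

    Uses the normal approximation with the standard correction for ties.
    z_critical=1.96 corresponds to a two-sided test at the 5% level.
    """
    n = len(values)
    if n < 3:
        raise ValueError("need at least 3 observations")

    # S adds +1 for every later value above an earlier one, -1 for every one below.
    s = sum((later > earlier) - (later < earlier)
            for earlier, later in combinations(values, 2))

    # Variance of S, reduced for each group of tied values.
    tie_term = sum(t * (t - 1) * (2 * t + 5)
                   for t in Counter(values).values() if t > 1)
    var_s = (n * (n - 1) * (2 * n + 5) - tie_term) / 18

    # Continuity-corrected Z statistic; S == 0 means no evidence either way.
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0

    if z > z_critical:
        trend = "increasing"
    elif z < -z_critical:
        trend = "decreasing"
    else:
        trend = "no trend"
    return s, z, trend


# Hypothetical quarterly concentrations (ug/L) from a single monitoring well.
concentrations = [4.1, 4.3, 4.0, 4.8, 5.2, 5.1, 5.9, 6.3, 6.1, 7.0]
print(mann_kendall(concentrations))
```

Because the test relies only on the ordering of the values, a single outlier or an unusual distribution affects it far less than it would a linear regression, which is one reason rank-based tests of this kind suit monitoring data.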

Overall, ITEMS was a manifestation of early 1990s AI and automation in environmental management. It had no humanoid robots or self-aware algorithms. Still, it didn’t need them – the intelligence was in how it streamlined workflows and encoded expert knowledge, such as regulatory standards and data validation rules, into the software. Duplan and Buckle demonstrated with ITEMS that many tasks that used to eat up scientists’ time could be offloaded to computers, yielding faster and potentially better decisions.
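To make the idea of encoding regulatory knowledge in software concrete, here is a minimal Python sketch of a shared substance library, a project list populated from it, and an automatic exceedance check. It is only an illustration of the concept, not a reconstruction of ITEMS. The names `Substance`, `LIBRARY`, `load_project_list`, and `flag_exceedances` are hypothetical, and the limit values are placeholders chosen for the example rather than authoritative regulatory limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Substance:
    name: str
    cas: str                # CAS registry number
    limit_ug_per_l: float   # placeholder limit for illustration only


# A tiny stand-in for the shared library of substances and standards.
LIBRARY = {
    "71-43-2": Substance("Benzene", "71-43-2", 5.0),
    "79-01-6": Substance("Trichloroethylene", "79-01-6", 5.0),
}


def load_project_list(cas_numbers):
    """Copy the selected substances from the shared library into a project."""
    missing = [cas for cas in cas_numbers if cas not in LIBRARY]
    if missing:
        raise KeyError(f"not in library: {missing}")
    return {cas: LIBRARY[cas] for cas in cas_numbers}


def flag_exceedances(project, results):
    """Return (well, substance name, value, limit) for results above the limit."""
    flagged = []
    for well, cas, value in results:
        substance = project[cas]
        if value > substance.limit_ug_per_l:
            flagged.append((well, substance.name, value, substance.limit_ug_per_l))
    return flagged


# Hypothetical results: (well ID, CAS number, concentration in ug/L).
project = load_project_list(["71-43-2", "79-01-6"])
results = [
    ("MW-1", "71-43-2", 12.0),
    ("MW-2", "71-43-2", 1.2),
    ("MW-1", "79-01-6", 5.0),
]
for row in flag_exceedances(project, results):
    print(row)
```

Keying the library on the CAS number rather than the substance name avoids mismatches caused by synonyms and spelling variants, which is the same transcription problem the prepared lists were meant to eliminate.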

It’s worth noting that while ITEMS itself may be obsolete now (a product of its hardware and software era), its legacy is profound. Many environmental software solutions that followed – including modern cloud platforms – borrow the same concepts, such as central databases, integrated GIS/graphics, electronic data capture, and automated compliance checking. In that sense, the authors’ predictions about expert systems did materialize: by the late 1990s and 2000s, the industry had widely adopted environmental information management systems that were direct spiritual successors of ITEMS. The following section will explore how these early ideas laid the groundwork for contemporary platforms, with a particular focus on how Locus Technologies has carried this torch forward, leveraging today’s AI and big data capabilities.

Contemporary Relevance and Locus Technologies’ Role

Fast forward to the 2020s, and we find ourselves in a world where the challenges identified in 1989 are even more pronounced, but the tools at our disposal are exponentially more powerful. Environmental data has truly entered the big data era: sensors in the field stream readings 24/7, laboratories output electronic results by the thousands, and decades’ worth of monitoring data can be warehoused in the cloud. The question is, have Duplan and Buckle’s early predictions about AI, automation, and integrated data systems come true? The answer, essentially, is yes – and one need look no further than companies like Locus Technologies to see these concepts in action.

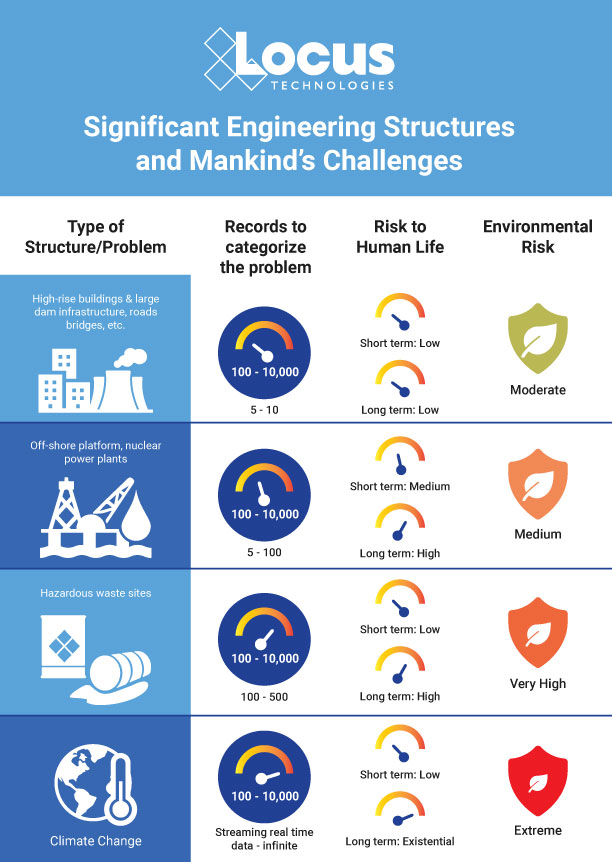

Figure 2: Long-term environmental risks

From Vision to Platform

Locus Technologies was co-founded in the late 1990s by Dr. Neno Duplan. In many ways, Locus was built on the very vision outlined in the 1989 and 1992 articles he co-authored: that efficient, centralized software can significantly improve environmental management. Over the past two decades, Locus has developed a cloud-based software suite, notably Locus EIM, its Environmental Information Management system, and the Locus Platform (LP), along with other applications, serving industries and government agencies worldwide. This platform approach means that all of a client’s environmental data – across air, water, soil, waste, and other media – can reside in one secure online system, much as ITEMS consolidated data on a local server, but now on a globally accessible scale.

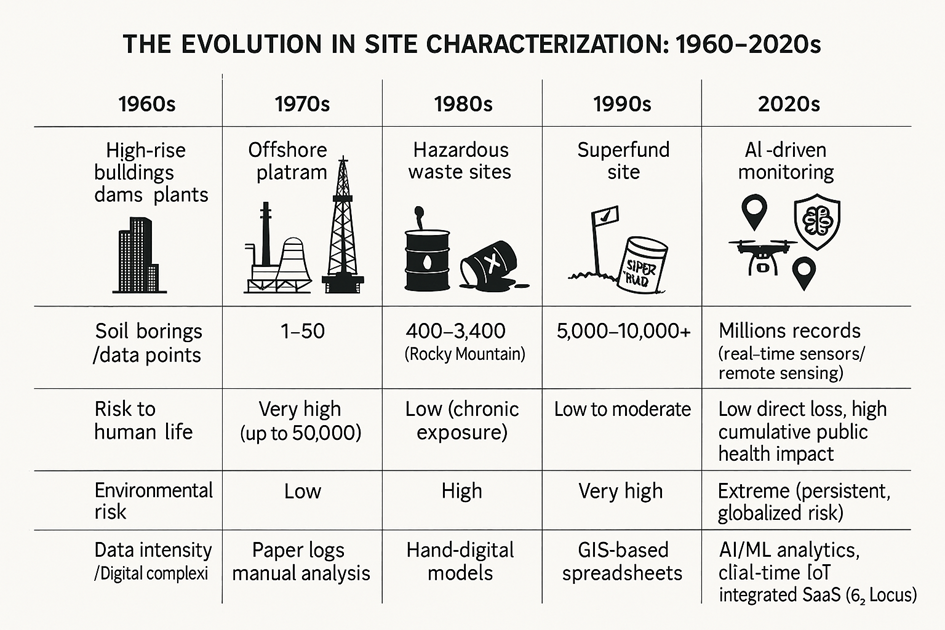

Figure 3: Evolution of the site characterization process

Consider the case of Los Alamos National Laboratory (LANL), a storied U.S. Department of Energy laboratory with a complex environmental footprint. In 2011, LANL selected Locus software to centralize and manage decades of monitoring and remediation data for its 37-square-mile site. The result was a single repository, hosted in Locus’s cloud, that replaced numerous independent databases and spreadsheets maintained by different teams. By pooling all this information, the lab reported significant cost savings, on the order of $15 million by 2015, due to improved efficiency and the elimination of redundant systems. Even more impressively, this database, called Intellus, contains over 40 million records of environmental data from the Los Alamos area. For perspective, that’s several orders of magnitude more data than the Rocky Mountain Arsenal example from 1989. Yet, with modern cloud infrastructure, handling tens of millions of data points in real time is routine. Users can query the system from any web browser and receive answers in seconds, a feat unimaginable in the early 1990s.

What Locus and similar platforms have done is fulfill the core idea of the early predictions: making environmental data accessible, interpretable, and actionable in real-time. Some key ways Locus’s current technology mirrors or advances the concepts from 1989 and 1992 include:

- Cloud-Based Centralization: Just as ITEMS had a central Oracle database, Locus has a central cloud database for each client, or even multitenant setups. The advantages now include scalability and remote access. Multiple stakeholders (including regulators, consultants, and the public) can access the same live data via web portals. In fact, for Los Alamos, an Intellus New Mexico public website was created, allowing anyone to view LANL’s monitoring data on a map with real-time updates. This kind of data transparency in real-time was only aspirational in the 90s; now it’s expected. As Locus notes, providing broad access to data with proper security can build public trust and enable collaborative problem-solving.

- Automation and Integration (Modernized): Locus’s platform integrates with GIS mapping tools, much as ITEMS interfaced with AutoCAD and GIS. In the cloud version, the integration is even smoother: maps and dashboards are built into the web interface, and IoT device feeds or mobile data collection apps can be plugged directly into the system. Reporting is highly automated; what once took a team weeks to compile (think of those quarterly reports for Fresh Kills with hundreds of plots) can now be generated by the software on demand, often with interactive visualizations. AI agents can now produce such reports without consultant involvement. Alerts can be set so that if a contaminant level exceeds a standard, the system notifies managers immediately, echoing the idea of an “intelligent database with an AI layer” that flags exceedances upon data arrival.

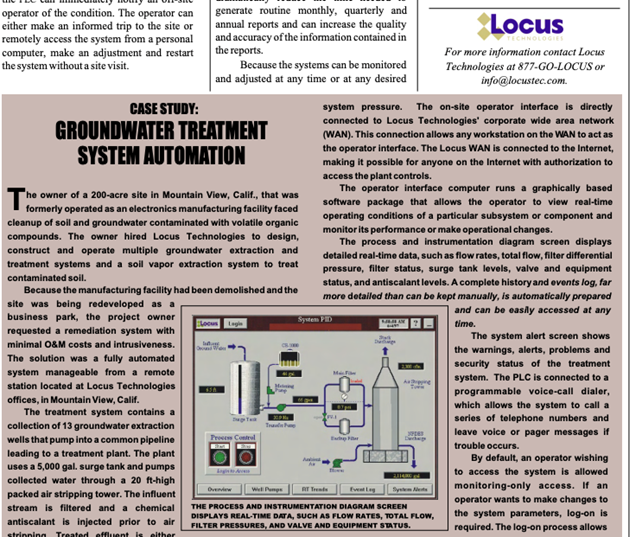

- Big Data Analytics and AI: Perhaps the most exciting development, and one directly in line with Duplan and Buckle’s foresight, is the application of artificial intelligence and machine learning to the massive datasets that have accumulated. Locus has begun exploring AI in areas such as natural language processing of regulations (to check compliance requirements automatically) and machine learning for pattern detection in complex datasets. One example is using AI to sift through continuous emissions monitoring data, instantly detecting anomalies and even automatically controlling systems, such as shutting off a discharge if a pollutant spike is detected. This is essentially the realization of the real-time, on-site decision-making that was envisioned in 1989. In 1998, Civil Engineering magazine published Locus’s article “Automatic Savings.” There, Duplan went further, predicting how IoT technology would be integrated and how routine tasks such as monitoring and the automation of treatment plant operations could be brought into the same cloud database.

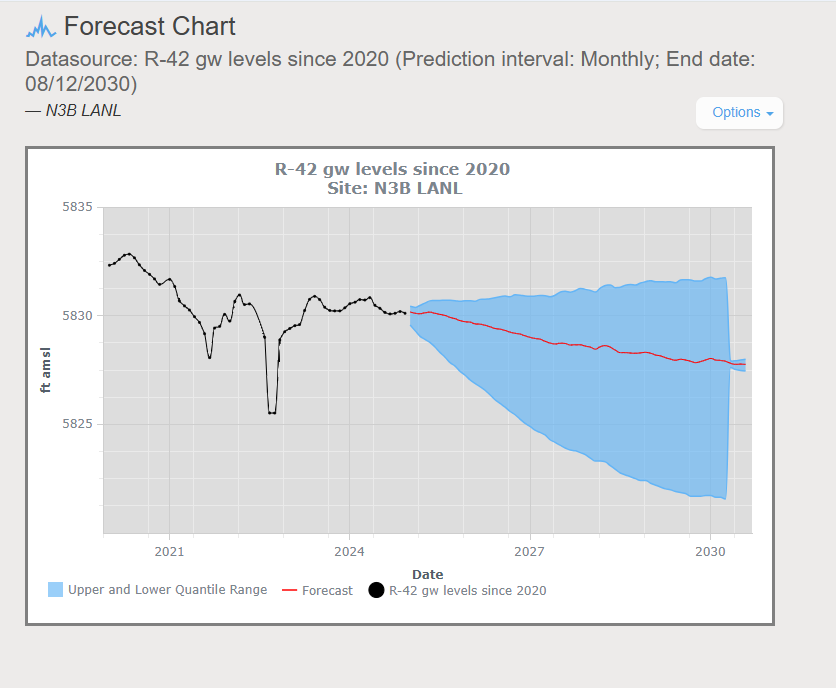

- Another area is predictive analytics, which involves using historical data to forecast future conditions. For instance, with decades of groundwater data across many sites, AI models can be trained to predict how a contaminant plume might move or when it might reach a receptor, far faster (and potentially more accurately) than manual modeling. Locus has suggested that “predictive analytics based on big data and AI will make customer data (legacy and new) work harder… engineers and geologists can improve on selecting the site remedy that will lead to faster and less expensive cleanup.” This directly echoes the sentiments of the 1989 article: reduce the number of samples, focus on targeted investigations, and implement more effective cleanups using intelligent tools.

- Leveraging Anonymized, Aggregated Data: One advantage Locus has, with an extensive roster of customers across various industries (including energy companies, federal labs, and municipalities), is the ability to analyze environmental data in aggregate, with identifying details removed. These anonymized, geographically diverse datasets are a treasure trove for understanding broader environmental trends. For example, with hundreds of sites’ worth of groundwater measurements, an AI could potentially identify patterns that a single-site view would miss. This could include recognizing the subtle signatures of an emerging contaminant, identifying correlations between specific pollutants and certain health outcomes at the regional level, or learning which early warning signs in the data precede, for example, the development of a pollution hotspot. The idea of predicting cancer clusters or disease outbreaks from environmental data is ambitious, but not far-fetched. Public health researchers increasingly use machine learning to correlate pollution exposure with health records. A company like Locus could collaborate in such efforts by providing the environmental side of the data equation (contaminant levels, locations, durations) while health agencies provide disease incidence data. With AI, one might detect, for example, that communities with particular patterns of volatile organic compound (VOC) groundwater plumes later saw elevated cancer rates – a signal to intervene much earlier in similar cases. In short, the scale and scope of data now available allow AI to find needles in the haystack that humans might never spot.

- Customer Base and Environmental Responsibility: Locus’s clientele itself speaks to the mainstreaming of these ideas. Los Alamos National Lab’s decision to entrust its environmental stewardship data to a cloud platform demonstrates confidence in modern data management for high-stakes scenarios (nuclear and chemical legacies). Other customers include governmental bodies and Fortune 500 companies, all of whom face public and regulatory scrutiny. By using such platforms, they can more credibly demonstrate environmental compliance and proactive risk management. For instance, if an official or a concerned citizen asks, “Is our water safe?”, a site using Locus EIM could provide current data in seconds, whereas 30 years ago it might have taken days to compile an answer. This responsiveness is a key aspect of being a responsible environmental actor today. It also, crucially, helps avoid the pitfalls of the past where data was available but not shared or acted upon. Locus’s approach of data transparency, real-time access, and analysis is a direct antidote to the data hoarding and delays that exacerbated many environmental disasters.

Figure 4: 1998 ASCE Civil Engineering article “Automatic Savings”

To sum up, the early ideas from 1989 and 1992 have not only taken hold; in some cases, they have been far surpassed in capability by existing systems. Duplan and Buckle envisioned expert systems assisting humans; today, we have AI algorithms that can go even further by identifying patterns invisible to us. Another of their aspirations was for faster decision-making; today, we have instantaneous dashboards and alerts. The size and complexity of the datasets generated in those early years were unprecedented and presented numerous analysis hurdles; today’s datasets are gargantuan, yet manageable with cloud computing. The continuous thread is the emphasis on data-driven decision-making in environmental management – a thread that Locus and its peers have woven into the fabric of modern environmental compliance and remediation.
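The alerting behavior described above, where a pollutant spike is flagged as readings arrive, can be sketched in a few lines of Python. This is a deliberately simple statistical baseline, not a description of how Locus software works. The function name `detect_spikes`, the window size, the threshold multiplier `k`, and the sample readings are all assumptions made for illustration.

```python
from collections import deque
from statistics import mean, stdev


def detect_spikes(readings, window=5, k=3.0):
    """Yield (index, value) for readings far above the trailing baseline.

    A reading is flagged when it exceeds the mean of the previous `window`
    unflagged readings by more than `k` sample standard deviations.
    """
    recent = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(recent) == window:
            baseline, spread = mean(recent), stdev(recent)
            if value > baseline + k * spread:
                yield i, value
                continue  # keep the spike out of the baseline
        recent.append(value)


# Hypothetical sensor readings; one obvious spike at index 6.
stream = [10.0, 10.2, 9.9, 10.1, 10.0, 10.3, 25.0, 10.1]
for index, value in detect_spikes(stream):
    print(f"ALERT: reading {index} = {value}")
```

A production system would also need to handle flat baselines (where the standard deviation is zero), sensor dropouts, and fixed regulatory thresholds, but the core loop of comparing each new reading against recent history is the same.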

However, with great power comes great responsibility. As we look at the contemporary landscape, it’s clear that technology alone isn’t enough; how we use it ethically and effectively is what truly determines outcomes. The following section will delve into cultural touchstones that highlight the consequences of poor environmental data management and underscore why “taming” data – and acting on it – is so critical.

Figure 5: AI-generated predictive analytics directly from Locus EIM

Neno Duplan

Founder & CEO

As Founder and CEO of Locus Technologies, Dr. Duplan has spent his career combining his understanding of environmental science with a vision of how to gather, aggregate, organize, and analyze environmental data to help organizations better manage and report their environmental and sustainability footprints. During the 1980s, while conducting research as a graduate student at Carnegie Mellon, Dr. Duplan developed the first prototype system for an environmental information management database. This work eventually led to the formation of Locus Technologies in 1997.

As technology has evolved and guidelines for environmental stewardship have expanded, so has the vision Dr. Duplan holds for Locus. From the company’s deployment of the world’s first commercial Software-as-a-Service (SaaS) product for environmental information management in 1999 to the Locus Mobile solution in 2014, Dr. Duplan has continued to lead and challenge his team to be the leading provider of cloud-based EH&S and sustainability software.

Dr. Duplan holds a Ph.D. in Civil Engineering from the University of Zagreb, Croatia, an M.S. in Civil Engineering from Carnegie Mellon University, and a B.S. in Civil Engineering from the University of Split, Croatia. He also attended advanced management training at Stanford University.
