Clean Data as the Cornerstone of Digital Transformation: Why Locus's Approach Matters More Than Ever

By Neno Duplan

Introduction

Locus Technologies, with its pioneering cloud-native, multitenant architecture, has long recognized that digital transformation succeeds only when it is built on clean data. Locus's Environmental Information Management (EIM) and Locus Platform (LP) applications were designed from the outset to ensure data integrity, eliminate silos, and enable organizations to turn information into actionable intelligence. While competitors have merged into roll-ups or held on to fragmented on-premise systems, Locus has consistently invested in a unified SaaS platform where clean, trustworthy data is the foundation for every decision.

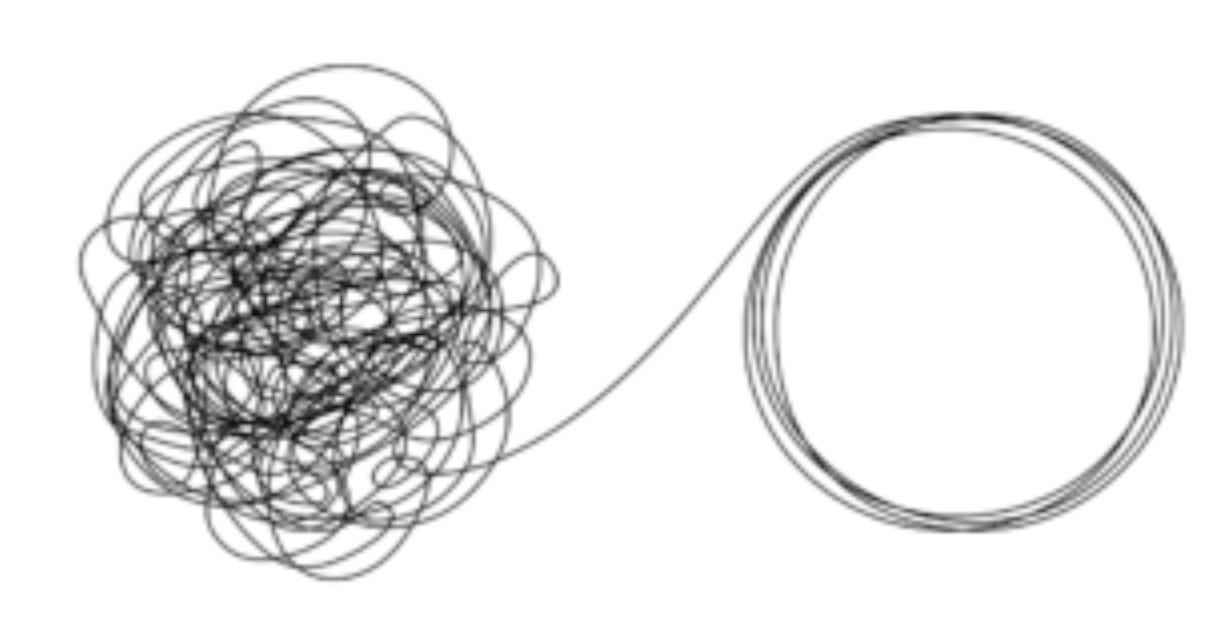

What if some data were dirty?

This paper updates the argument originally presented in "Dirty Data Blights the Bottom Line" and shows why Locus's approach is even more relevant today. It examines why clean data is essential to AI and LLM adoption, explores the significant costs of poor information management, and outlines the advantages Locus delivers to customers seeking long-term value.

Dirty Data, Dirty Costs

The lesson of that earlier paper still holds: poor data quality doesn't just create inefficiency; it undermines confidence in science, compliance, and ultimately business outcomes. Gartner has long estimated that bad data costs organizations an average of 15–25% of their revenue. In the environmental sector, where decades of monitoring, sampling, and regulatory reporting are the norm, these costs add up to billions of dollars annually.

Data capture.

Organizations often fail to control this problem because they outsource both sampling and data management to consultants. While consultants may focus on field or lab innovations, they have little incentive to modernize information management, since inefficiencies generate more billable hours. Companies, meanwhile, fail to demand better systems, assuming monitoring is less expensive than cleanup. In reality, as Locus has documented, long-term monitoring costs often dwarf initial remediation, especially when compounded by poor data management practices.

Why Clean Data Is the Bedrock of AI and LLMs

Six years ago, the urgency of data quality was framed around regulatory compliance and operational cost. Today, the conversation must be expanded: clean data is the fuel for AI and large language models.

LLMs, such as ChatGPT and domain-specific AI agents, are only as good as the data on which they are trained or fine-tuned. If organizations feed these models incomplete, inconsistent, or inaccurate environmental data, the resulting insights will be flawed at best and dangerously misleading at worst. Clean data enables:

- Trustworthy AI outputs – AI cannot reason around corrupted or inconsistent records.

- Advanced analytics – Trend detection, anomaly identification, and predictive modeling all require structured, validated datasets.

- Regulatory defensibility – AI-driven reporting must be transparent and verifiable to meet the requirements of regulators and stakeholders.

- Efficiency in model training – High-quality, normalized data reduces costs in preparing datasets for machine learning.

Dirty data may be as serious a problem as dirty water.

Without rigorous data management, investments in AI will fail—just as earlier generations of CRM and ERP projects collapsed under the weight of dirty data. Locus anticipated this challenge decades ago, and its platform is designed to centralize, standardize, and validate data at scale, making it uniquely ready for the AI era.

Locus’s Technology Advantage

- Cloud-Native Multitenancy

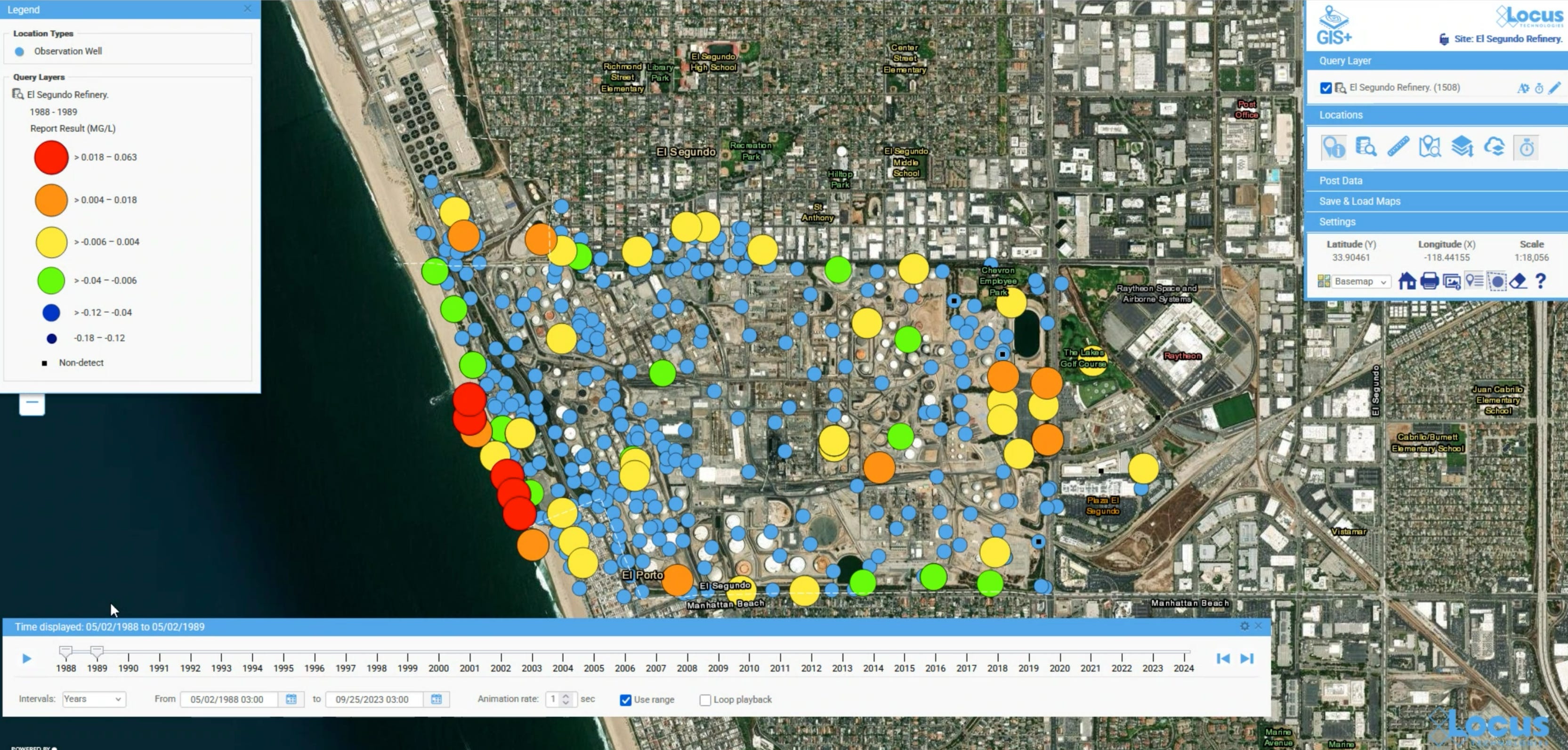

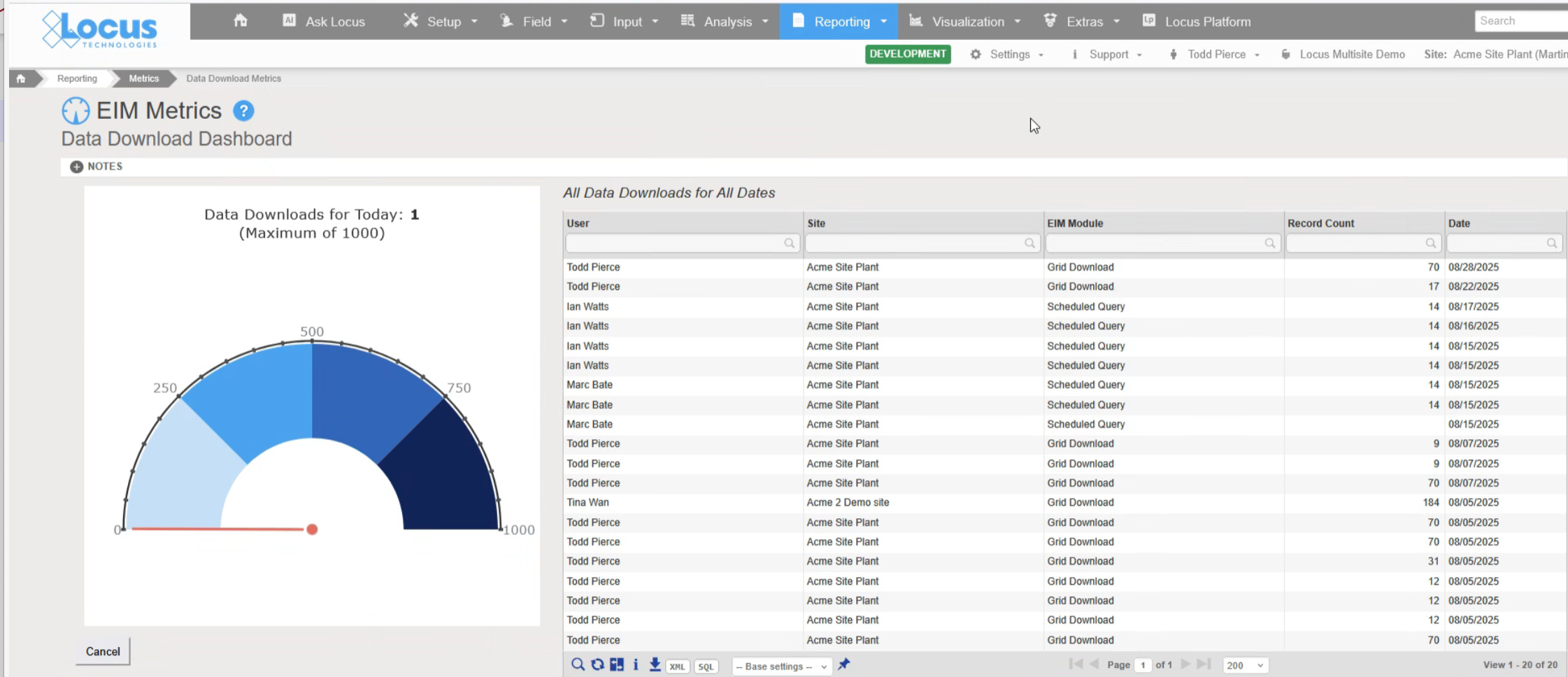

From its founding in 1997, Locus has been fully SaaS and multitenant, unlike competitors who started with siloed, on-premise systems and later patched them for the cloud. This architecture ensures continuous improvement, lower costs, and seamless integration of new tools such as AI, without requiring disruptive migrations.

- Centralized, Configurable Data Management

EIM and LP consolidate information from consultants, labs, and sites into a single repository. Standardized workflows, validation tools, and metadata-driven configurability keep data consistent and clean. Customers gain control of their data rather than being locked into consultant databases.

- AI-Ready Platform

With OneView, Locus has introduced AI-enabled dashboards, natural language queries, and predictive modeling, all powered by high-quality datasets. This positions Locus customers to leverage generative AI and digital twins with confidence—something competitors cannot deliver without major reengineering.
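Here is a minimal Python sketch of the consolidation and validation idea described under Centralized, Configurable Data Management. It is not Locus's implementation. It assumes a simplified record layout (site, sample ID, analyte, value, unit), a small hypothetical unit-conversion table, and hypothetical helpers `normalize` and `load`. The sketch shows how results from different labs or consultants might be standardized, checked, and de-duplicated before they enter a single repository.

```python
from dataclasses import dataclass

# Hypothetical conversion factors to one canonical concentration unit (ug/L).
# Any unit not listed here is rejected rather than guessed.
TO_UG_PER_L = {"ug/L": 1.0, "mg/L": 1000.0, "ng/L": 0.001}


@dataclass
class Result:
    site: str
    sample_id: str
    analyte: str
    value: float  # always expressed in ug/L after normalization
    unit: str


def normalize(raw: dict) -> Result:
    """Map one raw lab/consultant record onto a canonical Result, or raise ValueError."""
    required = ("site", "sample_id", "analyte", "value", "unit")
    missing = [k for k in required if raw.get(k) in (None, "")]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    unit = str(raw["unit"]).strip()
    if unit not in TO_UG_PER_L:
        raise ValueError(f"unknown unit: {unit}")
    value = float(raw["value"]) * TO_UG_PER_L[unit]  # float() raises on non-numeric text
    if value < 0:
        raise ValueError("negative concentration")
    return Result(
        site=str(raw["site"]).strip().upper(),        # "mw-01 " -> "MW-01"
        sample_id=str(raw["sample_id"]).strip(),
        analyte=str(raw["analyte"]).strip().lower(),  # "Benzene" -> "benzene"
        value=value,
        unit="ug/L",
    )


def load(records):
    """Split raw records into clean, de-duplicated results and rejects with reasons."""
    clean, rejected, seen = [], [], set()
    for raw in records:
        try:
            result = normalize(raw)
        except (ValueError, TypeError) as exc:
            rejected.append((raw, str(exc)))
            continue
        key = (result.site, result.sample_id, result.analyte)
        if key in seen:  # same sample reported twice, e.g. by two consultants
            rejected.append((raw, "duplicate"))
            continue
        seen.add(key)
        clean.append(result)
    return clean, rejected


# Usage: four raw rows as they might arrive from different sources.
raw_rows = [
    {"site": "mw-01", "sample_id": "S1", "analyte": "Benzene", "value": "0.005", "unit": "mg/L"},
    {"site": "MW-01 ", "sample_id": "S1", "analyte": "benzene", "value": 5, "unit": "ug/L"},
    {"site": "MW-02", "sample_id": "S2", "analyte": "Toluene", "value": "", "unit": "ug/L"},
    {"site": "MW-03", "sample_id": "S3", "analyte": "Xylene", "value": 3, "unit": "ppm"},
]
clean, rejected = load(raw_rows)
for row, reason in rejected:
    print(reason, row)
```

The sketch keeps rejected rows together with a reason instead of dropping them silently. That is one way to make a cleanup step auditable, which supports the regulatory defensibility point above.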

Photo by Becca Lavin on Unsplash. Dirty data creates problems for companies.

Industry Trends Confirm the Urgency

Companies are under growing pressure to improve long-term stewardship of contaminated sites, manage complex water systems, and integrate sustainability into their core operations. Each of these challenges requires not just technology but clean, consistent data as its foundation.

Conclusion: Data Quality as a Strategic Imperative

Locus's approach, which is centralized, cloud-native, multitenant, and AI-ready, offers customers a proven answer to the data quality challenge. By treating clean data as the cornerstone of environmental and sustainability information management, Locus helps customers save money and improve compliance. It also helps them unlock the full potential of AI and digital transformation.

The future of environmental management and ESG reporting will belong to the companies that recognize this truth. Locus has been preparing for it for nearly three decades.

For more information, please visit www.locustec.com.

Neno Duplan

Founder & CEO

As Founder and CEO of Locus Technologies, Dr. Duplan has spent his career combining his understanding of environmental science with a vision of how to gather, aggregate, organize, and analyze environmental data to help organizations better manage and report their environmental and sustainability footprints. During the 1980s, while conducting research as a graduate student at Carnegie Mellon, Dr. Duplan developed the first prototype system for an environmental information management database. This work eventually led to the formation of Locus Technologies in 1997.

As technology has evolved and guidelines for environmental stewardship have expanded, so has Dr. Duplan's vision for Locus. He led the company from its deployment of the world's first commercial Software-as-a-Service (SaaS) product for environmental information management in 1999 to the Locus Mobile solution in 2014. Today he continues to lead and challenge his team to be the leading provider of cloud-based EH&S and sustainability software.

Dr. Duplan holds a Ph.D. in Civil Engineering from the University of Zagreb, Croatia, an M.S. in Civil Engineering from Carnegie Mellon University, and a B.S. in Civil Engineering from the University of Split, Croatia. He also attended advanced management training at Stanford University.

Locus is the only self-funded water, air, soil, biological, energy, and waste EHS software company that is still owned and managed by its founder. The brightest minds in environmental science, embodied carbon, CO2 emissions, refrigerants, and PFAS hang their hats at Locus, and they’ve helped us to become a market leader in EHS software. Every client-facing employee at Locus has an advanced degree in science or professional EHS experience, and they incubate new ideas every day – such as how machine learning, AI, blockchain, and the Internet of Things will up the ante for EHS software, ESG, and sustainability.